

Index

I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. Generative adversarial nets. In Advances in Neural Information Processing Systems (NIPS), pages 2672–2680, 2014.

Motivation

Deep learning has achieved strong results with discriminative models in supervised learning. However, deep generative models have had “less of an impact” for two reasons:

- It is difficult to approximate the many intractable probabilistic computations that arise in maximum likelihood estimation and related strategies.

- It is difficult to leverage “the benefits of piecewise linear units in the generative context”.

The paper proposes a new generative model, generative adversarial nets (GANs), that avoids these difficulties.

Approach

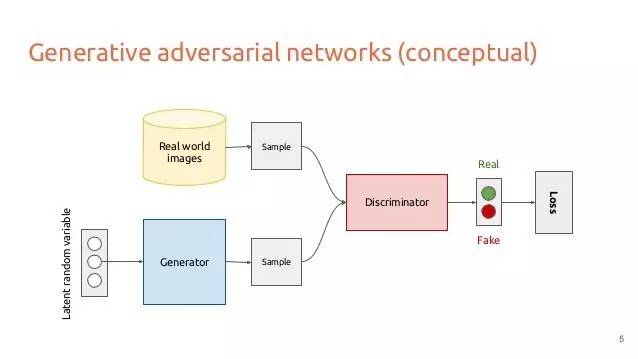

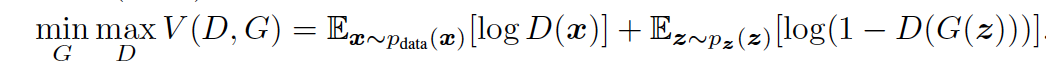

The framework of GANs corresponds to a “minimax two-player game” (discriminator vs. generator) with value function V(D, G):

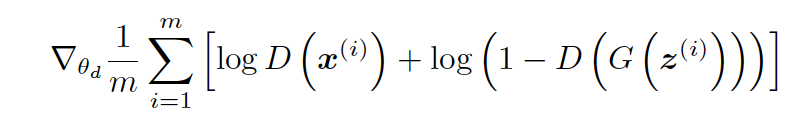

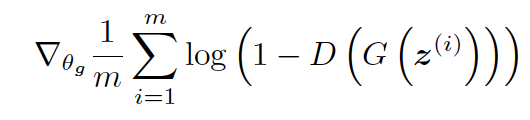

The generator maps noise samples drawn from a prior distribution to the data space, and the discriminator outputs the probability that a sample came from the target dataset rather than from the generator. The generator G is therefore trained to minimize the value function while the discriminator D is trained to maximize it. In practice, training alternates between k steps of optimizing D and one step of optimizing G.
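For reference, the value function defined in the paper can be written out as follows (the paper lists the arguments in the order $V(D, G)$), where $p_{\text{data}}$ is the data distribution and $p_z$ is the noise prior:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The first term rewards D for assigning high probability to real data, and the second rewards D for assigning low probability to generated samples, which is exactly what G tries to prevent.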

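- Update of discriminator: ascend the stochastic gradient computed on a minibatch of $m$ data samples $x^{(i)}$ and $m$ noise samples $z^{(i)}$:

  $$\nabla_{\theta_d} \frac{1}{m} \sum_{i=1}^{m} \left[ \log D\left(x^{(i)}\right) + \log\left(1 - D\left(G\left(z^{(i)}\right)\right)\right) \right]$$

- Update of generator: descend the stochastic gradient computed on a fresh minibatch of $m$ noise samples $z^{(i)}$:

  $$\nabla_{\theta_g} \frac{1}{m} \sum_{i=1}^{m} \log\left(1 - D\left(G\left(z^{(i)}\right)\right)\right)$$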
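The alternating procedure can be illustrated with a minimal sketch on a one-dimensional toy problem. Everything specific here is an assumption made for illustration and is not the paper's setup: the data distribution N(4, 1), the linear generator G(z) = a·z + b, the logistic discriminator D(x) = sigmoid(w·x + c), the hand-derived gradients, and the name `train_toy_gan` with its default hyperparameters.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=200, k=1, m=64, lr=0.05, seed=0):
    """Alternate k discriminator steps with one generator step (toy 1-D case)."""
    rng = random.Random(seed)
    w, c = 0.0, 0.0  # discriminator D(x) = sigmoid(w * x + c)
    a, b = 1.0, 0.0  # generator G(z) = a * z + b, with z ~ N(0, 1)
    d_updates = 0
    g_updates = 0

    for _ in range(steps):
        # k steps of gradient ASCENT on the discriminator objective
        for _ in range(k):
            xs = [rng.gauss(4.0, 1.0) for _ in range(m)]          # assumed real data
            gs = [a * rng.gauss(0.0, 1.0) + b for _ in range(m)]  # generated samples
            d_real = [sigmoid(w * x + c) for x in xs]
            d_fake = [sigmoid(w * g + c) for g in gs]
            # Gradients of (1/m) * sum[log D(x) + log(1 - D(G(z)))] w.r.t. w and c
            grad_w = (sum((1 - d) * x for d, x in zip(d_real, xs))
                      - sum(d * g for d, g in zip(d_fake, gs))) / m
            grad_c = (sum(1 - d for d in d_real) - sum(d_fake)) / m
            w += lr * grad_w
            c += lr * grad_c
            d_updates += 1

        # one step of gradient DESCENT on the generator objective
        zs = [rng.gauss(0.0, 1.0) for _ in range(m)]
        d_fake = [sigmoid(w * (a * z + b) + c) for z in zs]
        # Gradients of (1/m) * sum[log(1 - D(G(z)))] w.r.t. a and b
        grad_a = sum(-d * w * z for d, z in zip(d_fake, zs)) / m
        grad_b = sum(-d * w for d in d_fake) / m
        a -= lr * grad_a
        b -= lr * grad_b
        g_updates += 1

    return {"w": w, "c": c, "a": a, "b": b,
            "d_updates": d_updates, "g_updates": g_updates}


if __name__ == "__main__":
    print(train_toy_gan(steps=200, k=1))
```

The sketch is meant only to show the order of the k discriminator steps and the single generator step. With a discriminator this simple, the generator should not be expected to do much more than shift its samples toward the data.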

The paper also proves that the global optimum of this game is reached if and only if the distribution of samples from the generator equals the distribution of the target dataset. The model was tested on the MNIST, TFD, and CIFAR-10 datasets. Because training relies only on sampling from the generator, it avoids estimating the likelihood directly while still achieving decent performance.
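The optimality result can be checked numerically on a small discrete example. The sketch below assumes the paper's closed form for the best discriminator given a fixed generator, D*(x) = p_data(x) / (p_data(x) + p_g(x)), and evaluates the value function exactly on a finite support. The three-point distributions and the function names are hypothetical, chosen only for illustration.

```python
import math


def optimal_discriminator(p_data, p_g):
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) at each support point.

    Assumes every support point has positive mass under at least one distribution.
    """
    return [pd / (pd + pg) for pd, pg in zip(p_data, p_g)]


def value(p_data, p_g, d):
    """Exact V(D, G) = sum_x [p_data(x) log D(x) + p_g(x) log(1 - D(x))]."""
    total = 0.0
    for pd, pg, dx in zip(p_data, p_g, d):
        if pd > 0:
            total += pd * math.log(dx)
        if pg > 0:
            total += pg * math.log(1.0 - dx)
    return total


def value_at_optimal_d(p_data, p_g):
    """Value of the game when D is the best response to the generator."""
    return value(p_data, p_g, optimal_discriminator(p_data, p_g))


if __name__ == "__main__":
    p_data = [0.2, 0.5, 0.3]      # hypothetical data distribution
    p_mismatch = [0.6, 0.3, 0.1]  # hypothetical generator distribution
    print("matched generator:   ", value_at_optimal_d(p_data, p_data))
    print("-log 4:              ", -math.log(4))
    print("mismatched generator:", value_at_optimal_d(p_data, p_mismatch))
```

When the generator distribution equals the data distribution, D* is 1/2 everywhere and the value equals -log 4. Any mismatch yields a larger value, so the generator's best achievable outcome is to match the data.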

Limitation

- Evaluating such generative models requires new metrics (e.g., Inception Score, Fréchet Inception Distance, BLEU).

- It does not seem directly applicable to discrete data.