F.A. Gers, J. Schmidhuber, and F. Cummins. Learning to forget: continual prediction with LSTM. Neural Computation, 12(10):2451–2471, 2000.

Motivation

Although the original Long Short-Term Memory (LSTM) model outperformed traditional Recurrent Neural Networks (RNNs) in many areas, it still has a limitation in “processing continual input streams without explicitly marked sequence ends”: without resets, the internal cell state can keep growing over a long stream. This work introduced the forget gate, which “enables an LSTM cell to learn to reset itself at appropriate times”, to solve the problem.

Approach

The starting point is the “standard” LSTM of the time, which has an input gate, an output gate, and a memory cell. Its activations are:

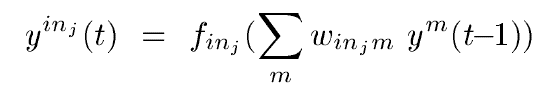

Input gate:

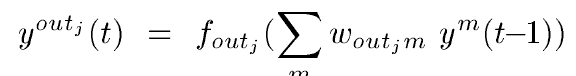

Output gate:

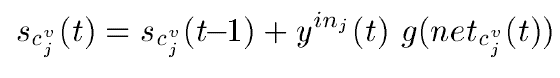

Memory cell:

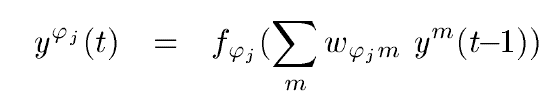

The paper proposes an extended LSTM that uses an adaptive forget gate to “reset memory blocks once their contents are out of date”. It does so simply by replacing the constant weight 1.0 of the “Constant Error Carousel” (CEC) with the multiplicative forget gate activation:

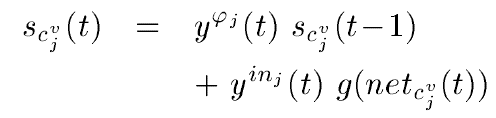

Thus the memory cell activation turns out to be:
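
In LaTeX form, writing $y^{\varphi}$ for the forget gate activation, $y^{in}$ for the input gate activation, $g$ for the cell input squashing function and $f$ for the gate squashing function (the symbol names are assumed here to follow the paper's notation), the state update reads:

$$
s_c(t) = y^{\varphi}(t)\, s_c(t-1) + y^{in}(t)\, g\big(net_c(t)\big), \qquad y^{\varphi}(t) = f\big(net_{\varphi}(t)\big)
$$

Fixing $y^{\varphi}(t) = 1$ recovers the standard LSTM update, in which the previous state is carried over unchanged through the CEC.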

The backpropagation derivation can be found in the previous note.
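
For the forward direction, one step of a single memory cell can be sketched in a few lines of Python. This is a minimal sketch rather than the paper's code: the function and argument names are hypothetical, the gates are assumed to use the logistic sigmoid, and `g` and `h` are assumed to be centered sigmoids with ranges [-2, 2] and [-1, 1]. The `net_*` arguments stand for the already-summed weighted inputs of each unit.

```python
import math


def sigmoid(x):
    """Logistic sigmoid, assumed here as the gate squashing function."""
    return 1.0 / (1.0 + math.exp(-x))


def g(x):
    """Cell input squashing function, assumed range [-2, 2]."""
    return 4.0 * sigmoid(x) - 2.0


def h(x):
    """Cell output squashing function, assumed range [-1, 1]."""
    return 2.0 * sigmoid(x) - 1.0


def cell_step(s_prev, net_in, net_forget, net_out, net_c, use_forget_gate=True):
    """One forward step of a single memory cell (hypothetical helper).

    Returns the new internal state and the cell output.
    """
    y_in = sigmoid(net_in)
    # Standard LSTM keeps the recurrent weight fixed at 1.0 (the CEC);
    # the extended LSTM replaces it with the forget gate activation.
    y_forget = sigmoid(net_forget) if use_forget_gate else 1.0
    y_out = sigmoid(net_out)

    s = y_forget * s_prev + y_in * g(net_c)
    y_c = y_out * h(s)
    return s, y_c
```

With `use_forget_gate=False` the previous state is carried over unchanged, so it can only be altered by new input. With the forget gate enabled, a strongly negative `net_forget` drives the multiplier toward 0 and effectively resets the cell.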

The approach was evaluated on the “embedded Reber grammar” (ERG) benchmark, in which the second and penultimate symbols of every string are always the same. On the original ERG task, standard LSTM outperformed other RNN algorithms. Extended LSTMs with forget gates were then tested on a continual variant of the ERG problem, which does not “provide information about the beginnings and ends of symbol strings”. On that variant, extended LSTM with learning rate decay achieved the best results.
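
To make the benchmark concrete, the following Python sketch generates embedded Reber strings and a continual stream built from them. It is illustrative only: the transition table is the Reber grammar as it is commonly drawn (an assumption, since the graph is not reproduced in this note), and all function names are hypothetical.

```python
import random

# Reber grammar transitions as commonly drawn (assumed, not taken from this note):
# state -> list of (emitted symbol, next state)
REBER = {
    0: [("T", 1), ("P", 2)],
    1: [("S", 1), ("X", 3)],
    2: [("T", 2), ("V", 4)],
    3: [("X", 2), ("S", 5)],
    4: [("P", 3), ("V", 5)],
}
FINAL_STATE = 5


def reber_string(rng):
    """Generate one plain Reber string, from 'B' to 'E'."""
    symbols = ["B"]
    state = 0
    while state != FINAL_STATE:
        symbol, state = rng.choice(REBER[state])
        symbols.append(symbol)
    symbols.append("E")
    return "".join(symbols)


def embedded_reber_string(rng):
    """Wrap a Reber string so that the 2nd and penultimate symbols match."""
    branch = rng.choice("TP")
    return "B" + branch + reber_string(rng) + branch + "E"


def continual_stream(rng, n_strings):
    """Concatenate strings with no marker between them (continual variant)."""
    return "".join(embedded_reber_string(rng) for _ in range(n_strings))
```

Predicting the penultimate symbol requires remembering the second one across the whole embedded string. In the continual stream, nothing tells the network where one string ends and the next begins, so it has to learn on its own when the stored symbol is out of date.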