

Index

G. Chen, “A gentle tutorial of recurrent neural network with error backpropagation,” arXiv preprint arXiv:1610.02583, 2016

Motivation

The paper focuses on the basics of backpropagation in recurrent neural networks (RNNs) and long short-term memory (LSTM) networks.

Approach

- RNN

The paper introduces the objective function of the RNN:

It also defines three types of weights: input layer to hidden layer (Wxh), hidden layer to output layer (Whz), and hidden layer to hidden layer across time steps (Whh). Their derivatives are computed by backpropagation, using the chain rule and total derivatives:

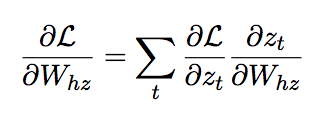

Whz:

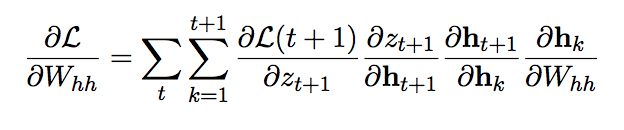

Whh:

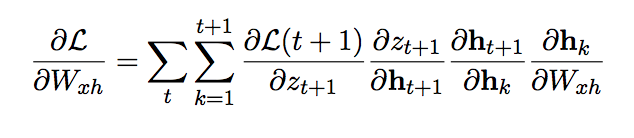

Wxh:
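The three gradients above can be sketched in NumPy as backpropagation through time. This is a minimal sketch under stated assumptions, not the paper's exact formulation: it assumes a tanh hidden layer, a softmax output, a negative log-likelihood loss summed over time steps, and no bias terms. The function name `rnn_loss_and_grads` and the variable names are illustrative.

```python
import numpy as np

def softmax(a):
    # Subtract the max for numerical stability; the result is unchanged.
    e = np.exp(a - a.max())
    return e / e.sum()

def rnn_loss_and_grads(xs, ys, Wxh, Whh, Whz):
    """xs: list of input vectors; ys: list of one-hot target vectors.

    Assumed model (biases omitted for brevity):
        h_t = tanh(Wxh x_t + Whh h_{t-1}),  z_t = softmax(Whz h_t)
        loss = -sum_t y_t . log(z_t)
    """
    T = len(xs)
    hs = {-1: np.zeros(Whh.shape[0])}  # h_{-1} is the zero initial state
    zs = {}
    loss = 0.0

    # Forward pass: store every hidden state for the backward pass.
    for t in range(T):
        hs[t] = np.tanh(Wxh @ xs[t] + Whh @ hs[t - 1])
        zs[t] = softmax(Whz @ hs[t])
        loss -= np.sum(ys[t] * np.log(zs[t]))

    dWxh = np.zeros_like(Wxh)
    dWhh = np.zeros_like(Whh)
    dWhz = np.zeros_like(Whz)
    dh_next = np.zeros(Whh.shape[0])  # gradient arriving from step t+1

    # Backward pass: walk the sequence in reverse.
    for t in reversed(range(T)):
        dalpha = zs[t] - ys[t]             # softmax + cross-entropy shortcut
        dWhz += np.outer(dalpha, hs[t])
        # Total derivative: h_t affects the loss through z_t and through h_{t+1}.
        dh = Whz.T @ dalpha + dh_next
        dpre = (1.0 - hs[t] ** 2) * dh     # derivative of tanh
        dWxh += np.outer(dpre, xs[t])
        dWhh += np.outer(dpre, hs[t - 1])
        dh_next = Whh.T @ dpre
    return loss, dWxh, dWhh, dWhz
```

Because Whh and Wxh are shared across time steps, their gradients accumulate a contribution from every step, while `dh_next` carries the dependence of later steps back to earlier hidden states. A finite-difference check on a few weight entries is a quick way to validate a sketch like this.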

- LSTM

Building on the RNN, the paper explains the structure of the LSTM, which has four gates: the input modulation gate, input gate, forget gate, and output gate. Each gate has its own weights on the current input Xt and on the previous hidden state ht-1:

| Gate | Weight on Xt | Weight on ht-1 |
|---|---|---|
| Input Modulation Gate | Wxc | Whc |
| Input Gate | Wxi | Whi |
| Forget Gate | Wxf | Whf |
| Output Gate | Wxo | Who |

The LSTM also introduces a memory cell, which lets it handle long sequences better than a plain RNN.

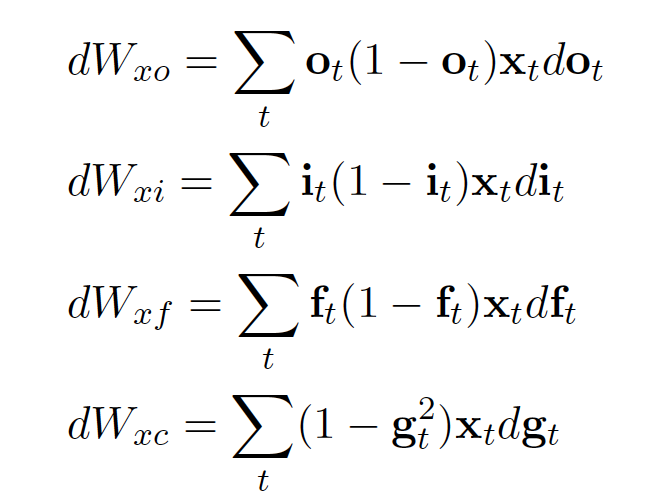
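The gate structure in the table can be sketched as a single forward step. This is a minimal sketch assuming the standard LSTM equations (sigmoid for the input, forget, and output gates, tanh for the input modulation gate) with biases omitted; the helper names `lstm_step` and `init_weights` and the dictionary layout are illustrative, not taken from the paper.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def lstm_step(x_t, h_prev, c_prev, W):
    """One LSTM time step. W maps the weight names from the table to matrices."""
    g = np.tanh(W["Wxc"] @ x_t + W["Whc"] @ h_prev)   # input modulation gate
    i = sigmoid(W["Wxi"] @ x_t + W["Whi"] @ h_prev)   # input gate
    f = sigmoid(W["Wxf"] @ x_t + W["Whf"] @ h_prev)   # forget gate
    o = sigmoid(W["Wxo"] @ x_t + W["Who"] @ h_prev)   # output gate
    c_t = i * g + f * c_prev          # memory cell: new content plus kept memory
    h_t = o * np.tanh(c_t)            # hidden state exposed to the next step
    return h_t, c_t, (g, i, f, o)

def init_weights(n_in, n_hid, seed=0):
    """Hypothetical helper: small random weights for all eight matrices."""
    rng = np.random.default_rng(seed)
    W = {}
    for gate in "cifo":
        W["Wx" + gate] = rng.normal(scale=0.1, size=(n_hid, n_in))
        W["Wh" + gate] = rng.normal(scale=0.1, size=(n_hid, n_hid))
    return W
```

The cell update is additive (`f * c_prev` plus new content), which is the usual explanation for why gradients survive over longer spans than in a plain RNN, where the hidden state is squashed through tanh at every step.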

Using backpropagation, the derivatives with respect to the weights on Xt (Wxc, Wxi, Wxf, Wxo) look like:

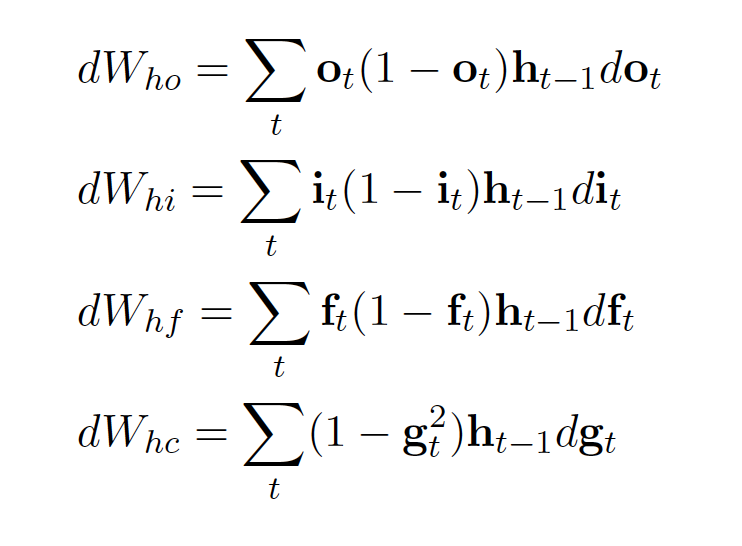

The derivatives with respect to the weights on ht-1 (Whc, Whi, Whf, Who) look like:
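Both families of weight gradients share the same gate-level error terms and differ only in the vector they are multiplied with (Xt or ht-1). The sketch below shows this for a single time step. It assumes the standard LSTM equations without biases, and it takes the upstream gradients `dh` (with respect to ht) and `dc_next` (with respect to ct, arriving from the next step) as given. The function name `lstm_step_grads` is illustrative, and the forward computation is repeated only so the block runs on its own.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def lstm_step_grads(x_t, h_prev, c_prev, W, dh, dc_next):
    """Gradients of all eight weight matrices for one time step.

    dh:      gradient of the loss with respect to h_t
    dc_next: gradient of the loss with respect to c_t coming from step t+1
    """
    # Forward pass (assumed standard LSTM, no biases).
    g = np.tanh(W["Wxc"] @ x_t + W["Whc"] @ h_prev)
    i = sigmoid(W["Wxi"] @ x_t + W["Whi"] @ h_prev)
    f = sigmoid(W["Wxf"] @ x_t + W["Whf"] @ h_prev)
    o = sigmoid(W["Wxo"] @ x_t + W["Who"] @ h_prev)
    c_t = i * g + f * c_prev
    tanh_c = np.tanh(c_t)

    # Total gradient on the cell: via h_t = o * tanh(c_t) and via the next step.
    dc = dc_next + dh * o * (1.0 - tanh_c ** 2)

    # Error terms at each gate's pre-activation (chain rule through tanh/sigmoid).
    delta = {
        "c": dc * i * (1.0 - g ** 2),          # input modulation gate
        "i": dc * g * i * (1.0 - i),           # input gate
        "f": dc * c_prev * f * (1.0 - f),      # forget gate
        "o": dh * tanh_c * o * (1.0 - o),      # output gate
    }

    grads = {}
    for gate, d in delta.items():
        grads["Wx" + gate] = np.outer(d, x_t)     # weights on Xt
        grads["Wh" + gate] = np.outer(d, h_prev)  # weights on ht-1
    return grads
```

For a full sequence, these per-step gradients would be summed over time, and the error would also be propagated to `h_prev` and `c_prev` before moving to the previous step; that part is left out here to keep the sketch short.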